Workflows

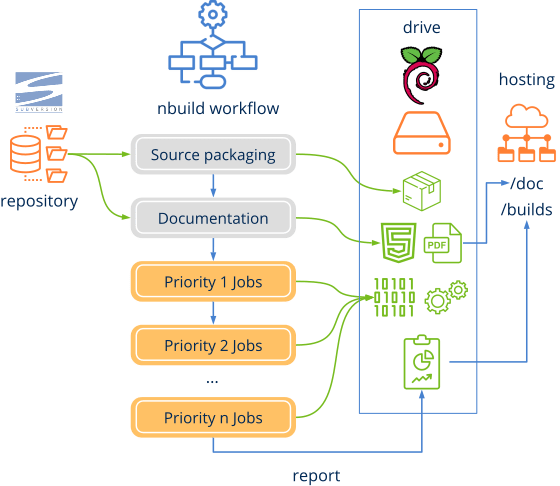

nbuild executes a fixed pipeline (Figure 1) made up of some mandatory and some optional steps. The pipeline is configured by the workflow file passed as the second parameter of the nbuild -w workflow.json command (Listing 1).

Listing 1: workflow.json file.


{

"global" : { ... },

"repo" : { ... },

"doc" : { ... },

"hosting" : { ... },

"version" : "prj/version.txt",

"build" : "prj/build.txt",

"sources" : { ... },

"tests" : { ... },

"jobs" : { ... }

}


1. Globals

This section of the workflow.json contains the main project and flow data:


"globals" : {

"project" : "NAppGUI",

"description" : "Cross-platform C SDK",

"start_year" : 2015,

"author" : "Francisco Garcia Collado",

"flowid" : "nappgui_src",

"license" : [

"MIT Licence",

"https://nappgui.com/en/legal/license.html" ]

}


flowid is used to create the directory structure, on both drive and host, that holds the artifacts and temporary files of this particular flow. The remaining fields are used for logging and documentation tasks, for example when adding legal information to each source code file.

/*

 * NAppGUI Cross-platform C SDK

 * 2015-2025 Francisco Garcia Collado

 * MIT Licence

 * https://nappgui.com/en/legal/license.html

 *

 * File: stream.h

 * https://nappgui.com/en/core/stream.html

 *

 */

/* Data streams */

#include "core.hxx"
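To make the relationship between the globals fields and the legal header concrete, here is a minimal Python sketch that assembles a similar comment block. It is not nbuild's implementation: the function name `legal_header` and its parameters are hypothetical, the layout is inferred only from the sample header above, and the per-file documentation URL is left out because the text does not say how it is derived.

```python
from datetime import date
from typing import Optional


def legal_header(globals_cfg: dict, filename: str, year: Optional[int] = None) -> str:
    """Build a C comment block from the "globals" fields (hypothetical helper)."""
    # Assumption: the end of the year range is the current year.
    year = year if year is not None else date.today().year
    lines = [
        f"{globals_cfg['project']} {globals_cfg['description']}",
        f"{globals_cfg['start_year']}-{year} {globals_cfg['author']}",
        *globals_cfg["license"],  # licence name, then licence URL
        "",
        f"File: {filename}",
    ]
    # Prefix every line with " * " and drop trailing spaces on empty lines.
    body = "\n".join(f" * {line}".rstrip() for line in lines)
    return f"/*\n{body}\n *\n */\n"


GLOBALS = {
    "project": "NAppGUI",
    "description": "Cross-platform C SDK",
    "start_year": 2015,
    "author": "Francisco Garcia Collado",
    "license": ["MIT Licence", "https://nappgui.com/en/legal/license.html"],
}

print(legal_header(GLOBALS, "stream.h", year=2025))
```

Passing the year explicitly keeps the output reproducible; by default the sketch uses the current year.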

2. Repository

This essential section specifies how to access the repository where the code is hosted (Listing 3).


"repo" : {

"url" : "svn://192.168.1.2/svn/NAPPGUI/trunk",

"user" : "user",

"pass" : "pass"

}


Initially, only Subversion repositories are supported. Support for Git and local file systems is planned.

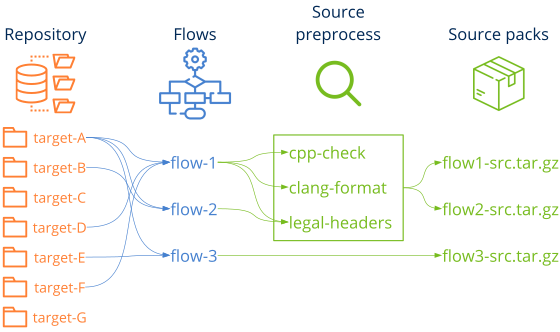

3. Source code

Not all the code in the repository may be needed for the flow at hand (Listing 4) (Figure 2). The repository may contain code from other projects or unnecessary files, so the relevant files must first be selected and packaged. The first step generates a src.vers.tar.gz with the code relevant to the flow. This package is then sent to the compilers through the node network.


"sources" : [

{"name" : "src/sewer", "legal" : true, "analyzer" : true },

{"name" : "src/osbs", "legal" : true, "analyzer" : true },

{"name" : "src/core", "legal" : true, "analyzer" : true },

{"name" : "src/geom2d", "legal" : true, "analyzer" : true },

{"name" : "src/draw2d", "legal" : true, "analyzer" : true },

{"name" : "prj", "format" : false },

{"name" : "cicd/nappgui_src/CMakeTargets.cmake", "dest" : "CMakeTargets.cmake" },

...

{"name" : "CMakeLists.txt" },

{"name" : ".clang-format" }

]


- name: Name of the directory in the repository.

- dest: Name of the directory in the package (optional).

- legal: If true, a header with the project's legal information will be added to each detected source code file (*.c, *.h, *.cpp, etc).

- format: If true and a .clang-format exists, source code files (*.c, *.h, *.cpp, etc) will be formatted.

- analyzer: If true, a static code analyzer will be run for each source code file.

Important:

- The code package to be compiled is a subset of the repository.

- No network node (runner) will have access to the repository, only the master node.

- The code is packaged in a src.vers.tar.gz file and stored on drive, as another artifact.

- The packaging process performs "pre-processing" of the files, such as formatting them, adding legal information or running static analyzers.

- The generated package must have a CMakeLists.txt to compile the code and install the binaries and headers.
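The selection and renaming performed during packaging can be sketched with Python's standard tarfile module. This is an illustration only, not nbuild's code: `package_sources` is a hypothetical name, the pre-processing steps (legal, format, analyzer) are deliberately omitted, and it assumes that an entry without dest keeps its repository-relative name inside the package.

```python
import tarfile
from pathlib import Path


def package_sources(repo_root, sources, out_path):
    """Pack only the listed entries into a gzip-compressed tar archive."""
    repo_root = Path(repo_root)
    with tarfile.open(out_path, "w:gz") as tar:
        for entry in sources:
            src = repo_root / entry["name"]
            # Assumption: "dest" is optional and defaults to "name".
            arcname = entry.get("dest", entry["name"])
            # Directories are added recursively; files are added as-is.
            tar.add(src, arcname=arcname)
    return out_path
```

Anything in the repository that is not listed in sources never reaches the archive, which is how the package ends up as a subset of the repository.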

4. Test code

This optional step is similar to source code packaging and generates the test package test.vers.tar.gz (Listing 5). If no tests are specified, the flow will only compile the code, without testing it.

exec: Executable command to launch the test.


"tests" : [

{"name" : "test/tlib", "dest" : "tlib" },

{"name" : "test/data", "dest" : "data" },

{"name" : "test/dylib", "dest" : "dylib" },

{"name" : "test/sewer", "dest" : "sewer", "exec" : "sewer_test" },

{"name" : "test/osbs", "dest" : "osbs", "exec" : "osbs_test" },

{"name" : "test/core", "dest" : "core", "exec" : "core_test" },

{"name" : "test/draw2d", "dest" : "draw2d", "exec" : "draw2d_test" },

{"name" : "test/encode", "dest" : "encode" , "exec" : "encode_test"},

{"name" : "test/inet", "dest" : "inet" , "exec" : "inet_test"},

{"name" : "test/CMakeLists.txt", "dest" : "CMakeLists.txt" }

]
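In the listing above, only some entries carry an exec field; the others (tlib, data, dylib, CMakeLists.txt) appear to be support material. As a rough sketch of how a runner might separate the two, the hypothetical helper below returns the package directory and command for each runnable test. How nbuild actually locates and launches the executables is not described here.

```python
def runnable_tests(tests):
    """Return (package directory, command) pairs for entries that define "exec"."""
    return [
        (entry.get("dest", entry["name"]), entry["exec"])
        for entry in tests
        if "exec" in entry
    ]


TESTS = [
    {"name": "test/tlib", "dest": "tlib"},
    {"name": "test/data", "dest": "data"},
    {"name": "test/sewer", "dest": "sewer", "exec": "sewer_test"},
    {"name": "test/core", "dest": "core", "exec": "core_test"},
    {"name": "test/CMakeLists.txt", "dest": "CMakeLists.txt"},
]

for directory, command in runnable_tests(TESTS):
    print(f"{directory}: {command}")
```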

5. Documentation

Optionally, the flow can generate the project documentation using ndoc (Listing 6). This tool is part of the nbuild project and generates static websites in several languages, as well as PDF ebooks typeset with LaTeX.


"doc" : {

"url" : "svn://192.168.1.2/svn/NAPPGUI/trunk/doc",

"user" : "user",

"pass" : "pass"

}


Examples generated with ndoc:

6. Hosting

Optionally, nbuild can publish both the project documentation and the build reports to an external web server. This makes every report accessible from anywhere outside the internal development network.


"hosting" : {

"url" : "hosting_url",

"user" : "user",

"pass" : "pass",

"docpath" : "./web/docs",

"buildpath" : "./web/builds"

}


7. Compilation jobs

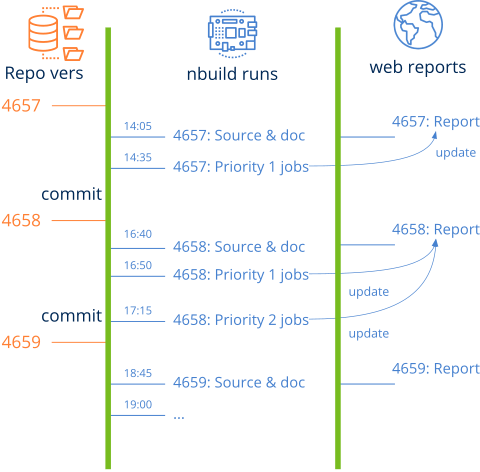

Finally, the most important part: the compilation jobs to be executed within this flow (Listing 8).


"jobs": [

{"priority" : 1, "name" : "ub24_clang_x64_deb", "config" : "Debug", "tags" : ["ubuntu24", "x64"], "generator" : "Ninja", "opts" : "-DCMAKE_C_COMPILER=clang -DCMAKE_CXX_COMPILER=clang++" },

{"priority" : 1, "name" : "ub24_gcc_x64_dll_rel", "config" : "Release", "tags" : ["ubuntu24", "x64"], "generator" : "Unix Makefiles", "opts" : "-DCMAKE_C_COMPILER=gcc -DCMAKE_CXX_COMPILER=g++ -DNAPPGUI_SHARED=YES" },

{"priority" : 1, "name" : "ub24_gcc_x64_rel", "config" : "Release", "tags" : ["ubuntu24", "x64"], "generator" : "Ninja", "opts" : "-DCMAKE_C_COMPILER=gcc -DCMAKE_CXX_COMPILER=g++" },

{"priority" : 1, "name" : "ub24_clang_x64_dll_deb", "config" : "Debug", "tags" : ["ubuntu24", "x64"], "generator" : "Unix Makefiles", "opts" : "-DCMAKE_C_COMPILER=clang -DCMAKE_CXX_COMPILER=clang++ -DNAPPGUI_SHARED=YES" },

...

]


- priority: If we have many tasks, it is possible to divide them into groups with increasing priority. In each execution, nbuild will only compile those tasks with higher priority (lower value).

- name: Name of the task, to be displayed in logs and reports.

- config: Configuration (Debug, Release, etc).

- tags: Tags needed to select a correct runner for compilation.

- generator: CMake generator with which we want to configure the compilation.

- opts: Additional CMake options needed to configure the compilation.

The flow is designed to complete the highest-priority tasks as quickly as possible; successive runs of nbuild then complete the lower-priority ones (Figure 3). When the code changes frequently, nbuild focuses on the highest-priority tasks, so that bugs introduced by the latest changes are caught as early as possible. Conversely, during periods of low activity (nights or weekends), the system gradually completes the low-priority tasks until it has covered every compilation and test defined in workflow.json.
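The priority behaviour described above can be illustrated with a small selection function. This is a sketch under stated assumptions rather than nbuild's scheduler: `next_jobs` and the `completed` set (names of jobs already built for the current repository revision) are hypothetical, and the text does not say how nbuild records completed jobs. The priority value 2 in the sample data is also illustrative.

```python
def next_jobs(jobs, completed):
    """Select the pending jobs that share the best (lowest) priority value."""
    pending = [job for job in jobs if job["name"] not in completed]
    if not pending:
        return []
    best = min(job["priority"] for job in pending)
    return [job for job in pending if job["priority"] == best]


JOBS = [
    {"priority": 1, "name": "ub24_clang_x64_deb"},
    {"priority": 1, "name": "ub24_gcc_x64_rel"},
    {"priority": 2, "name": "ub24_gcc_x64_dll_rel"},  # illustrative lower priority
]

# First run: nothing is built yet, so only priority-1 jobs are selected.
first = next_jobs(JOBS, completed=set())
# A later run on the same revision: priority-1 jobs are done, priority 2 follows.
second = next_jobs(JOBS, completed={job["name"] for job in first})
print([job["name"] for job in first])
print([job["name"] for job in second])
```

If a new commit arrives between runs, the completed set would start empty again, so the priority-1 group is rebuilt first. This matches the stated goal of catching recent bugs early.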

Different instances of nbuild can be run in parallel, as long as they work on different flows.

8. Additional data

These entries are optional within the workflow.json but it is recommended to include them.

- version: File within the repository indicating the version of the software. It should contain a single line with the format major.minor.patch (for example, 1.5.6).

- build: Path within the final package that will contain information about the compiled package.
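A version file in this one-line, three-number format is easy to validate. The following sketch shows one way to parse it. `parse_version` is a hypothetical helper, and the strict checks (exactly one line, exactly three numeric fields) are an assumption about how tolerant a reader should be, not documented nbuild behaviour.

```python
import re


def parse_version(text):
    """Parse the contents of a one-line version file into three integers."""
    lines = text.strip().splitlines()
    if len(lines) != 1:
        raise ValueError("version file must contain exactly one line")
    match = re.fullmatch(r"(\d+)\.(\d+)\.(\d+)", lines[0].strip())
    if match is None:
        raise ValueError(f"unexpected version format: {lines[0]!r}")
    return tuple(int(part) for part in match.groups())


print(parse_version("1.5.6\n"))
```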

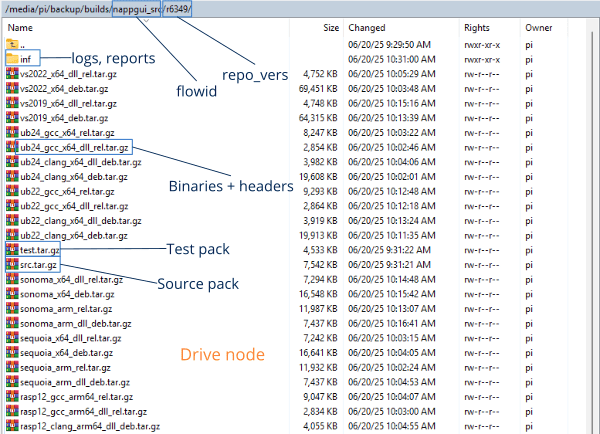

9. Artifact management in drive

As indicated in previous sections, all packages, binaries, logs and reports are stored on a dedicated network node called drive, which has a large disk capacity (Figure 4). The information is organized by flow name and repository version (Figure 5).